While the technology used to create deepfakes is relatively new, it is advancing quickly, and it is becoming more and more difficult to tell whether a video is real. Deepfake software is programmed to map faces according to “landmark” points: features like the corners of your eyes and mouth, your nostrils, and the contour of your jawline. A GAN (generative adversarial network) is a multi-use technology: it can generate new images from existing images, new audio from existing audio, or new text from existing text. For example, a GAN can look at thousands of photos of Beyoncé and produce a new image that approximates those photos without being an exact copy of any one of them.
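To make the idea of “landmark” points more concrete, here is a minimal Python sketch of mapping one face onto another by its landmarks. It assumes each face has already been reduced to a dictionary of named 2D points; the landmark names and coordinates are hypothetical (real landmark detectors use their own numbering schemes). The sketch only normalizes for position and size. It ignores rotation, 3D head pose, and skin texture, all of which real deepfake tools must handle.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]
Landmarks = Dict[str, Point]  # hypothetical: landmark name -> (x, y) pixel coordinates


def centroid(face: Landmarks) -> Point:
    """Average position of all landmarks (the face's 'center')."""
    xs = [p[0] for p in face.values()]
    ys = [p[1] for p in face.values()]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def scale(face: Landmarks) -> float:
    """Mean distance of the landmarks from the centroid (the face's 'size')."""
    cx, cy = centroid(face)
    return sum(math.hypot(x - cx, y - cy) for x, y in face.values()) / len(face)


def map_landmarks(source: Landmarks, target: Landmarks) -> Landmarks:
    """Move and resize the source face's landmarks so they sit where the target face is.

    Only translation and uniform scaling are handled; rotation is ignored.
    """
    scx, scy = centroid(source)
    tcx, tcy = centroid(target)
    ratio = scale(target) / scale(source)
    return {
        name: (tcx + (x - scx) * ratio, tcy + (y - scy) * ratio)
        for name, (x, y) in source.items()
    }


# Hypothetical landmark coordinates for two faces in different images.
source_face = {
    "left_eye": (30.0, 40.0), "right_eye": (70.0, 40.0),
    "nose_tip": (50.0, 60.0), "left_mouth": (35.0, 80.0), "right_mouth": (65.0, 80.0),
}
target_face = {
    "left_eye": (115.0, 120.0), "right_eye": (135.0, 120.0),
    "nose_tip": (125.0, 130.0), "left_mouth": (117.5, 140.0), "right_mouth": (132.5, 140.0),
}

mapped = map_landmarks(source_face, target_face)
for name, (x, y) in mapped.items():
    print(f"{name}: ({x:.1f}, {y:.1f})")
```

Because the made-up target face here is simply a half-size, shifted copy of the source face, the mapped landmarks land on the target's own landmarks, which is an easy way to check that the normalization works.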

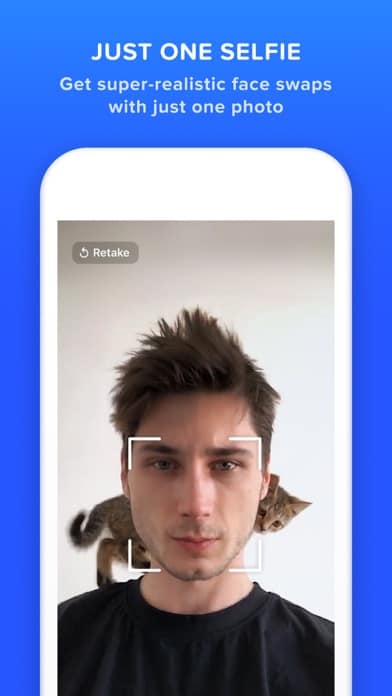

Deepfakes are computer-created artificial videos in which images are combined to create new footage that depicts events, statements, or actions that never actually happened. They are made using digital software, machine learning, and face swapping, and they differ from other forms of false information in being very difficult to identify as false. Fake videos can be created using a machine learning technique called a “generative adversarial network,” or GAN. The basic concept behind the technology is facial recognition: users of Snapchat will be familiar with the face-swap and filter functions, which apply transformations to your facial features or augment them. Deepfakes are similar, but much more realistic. For example, I use this little video to welcome my students to class each term.

With a bit of post-processing, you can make your own short deepfake video. In our Google Colab, all you need to do is upload a folder with the target image and your own (source) video to your Google Drive, then run the Colab notebook block by block. Do you want to learn more about how it works? Aliaksandr posted a very good video explaining that here.


To explain how deepfakes work, we created a simple Google Colab for you that builds on the initial code from Aliaksandr Siarohin, who (no surprise here) works for Snap. The underlying AI detects facial expressions, eye movements, and head position, which are then superimposed on a unicorn, a potato, or whatever Snap filter is in vogue. Anyone who has used Snap will have used such an approach. The initial neural network is trained on many hours of real video footage of people to help the AI recognize important features of a person's face, such as the eyes, upper and lower lips, teeth, ears, eyebrows, and the outline of the face. The computer then automatically maps the right parts of the source image onto the destination image. Since a video is a collection of images (frames) placed one after the other, this neural network allows us to photoshop each frame of a video.
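Because a video is just a sequence of frames, the frame-by-frame idea can be sketched in a few lines of Python. The sketch below assumes each face has already been reduced to a few named landmarks per frame (names and coordinates are made up) and shows only a motion-transfer step: in every frame, the source face's landmarks are shifted by however far the driving video's landmarks have moved since its first frame. This is an illustration of the idea, not the code in the Colab or in Siarohin's repository, and it leaves out landmark detection, adjusting for different face sizes, and rendering the new pixels.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Landmarks = Dict[str, Point]  # hypothetical: landmark name -> (x, y)


def transfer_motion(source: Landmarks, driving_frames: List[Landmarks]) -> List[Landmarks]:
    """For every driving frame, move the source landmarks by the same amount the
    driving landmarks have moved since the first driving frame.

    A video is a list of frames, so the same per-frame step is simply repeated;
    a real system would then generate new pixels around these points.
    """
    reference = driving_frames[0]
    output = []
    for frame in driving_frames:
        moved = {}
        for name, (sx, sy) in source.items():
            dx = frame[name][0] - reference[name][0]
            dy = frame[name][1] - reference[name][1]
            moved[name] = (sx + dx, sy + dy)
        output.append(moved)
    return output


# Hypothetical landmarks of the still photo we want to animate.
source_face = {"left_eye": (40.0, 50.0), "right_eye": (60.0, 50.0), "mouth": (50.0, 70.0)}

# Hypothetical landmarks tracked over three frames of a driving video:
# the head shifts right, then the mouth opens (moves down).
driving_video = [
    {"left_eye": (100.0, 100.0), "right_eye": (120.0, 100.0), "mouth": (110.0, 120.0)},
    {"left_eye": (105.0, 100.0), "right_eye": (125.0, 100.0), "mouth": (115.0, 120.0)},
    {"left_eye": (105.0, 100.0), "right_eye": (125.0, 100.0), "mouth": (115.0, 124.0)},
]

for i, frame in enumerate(transfer_motion(source_face, driving_video)):
    print(i, frame)
```

Using motion relative to the first driving frame (rather than the driving landmarks' absolute positions) keeps the source face where it is in its own photo while copying the driver's movements.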


But a GAN sets up two neural networks, so what about the second one? I'm glad you asked: this is where the “adversarial” component comes in. The second neural network, called the discriminative classifier, checks the first, generative neural network. Essentially, it decides whether an image produced by the first network is real or fake. This way, the two neural networks train each other, and the generated images become more and more realistic. This is pretty cool! Today, though, most deepfake tools do not use GANs. Rather, they leverage either encoder-decoder pairs or first-order motion models.

# Encoder-decoder pairs

Deep learning models have different layers, and each layer represents a mathematical abstraction of the layer before it. Said differently, what you would see in those layers (if you were to open up the deep neural network black box) is no longer the reality one can observe, but rather an inferred state based on the mathematical model of the preceding layers. Going from an original image to such a latent image is called encoding.

We do something similar ourselves: we see a cat, and we use the word (i.e., the representation) “CAT” for it. How do we know that a given object is a cat? By using the knowledge stored in our brain: we have seen many cats, and our brain has created a connection between the image and the encoded word “cat.” Likewise, once the computer has encoded many similar images (such as images of cats), it can reverse this process and go from “cat” to an image. Take a look at DALL-E 2 to see how powerful this process can be.

Consider the process of encoding and decoding an image of a “2.” After the encoding stage, the computer stores the information, or latent image, for the number; this is just the information “this is a two.” Next, the decoder turns this information back into an image of a “2” based on how the computer imagines it.

See full article: Thanh Thi Nguyen

# First-Order Motion Model

A slightly different approach for deepfakes is to replace the encoder with a motion model.
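The encode-then-decode round trip from the “2” example can be sketched without a neural network. The Python below is a deliberately tiny stand-in, not what deepfake tools use: each “image” is a hypothetical list of just four pixel values, and the latent code is a single number learned with principal component analysis (PCA), which can be viewed as the simplest, linear form of an encoder-decoder pair. The encoder compresses an image to that one number; the decoder turns the number back into an image. Real encoders are deep networks with many layers and far larger latent codes.

```python
import math
from typing import List

Image = List[float]  # hypothetical: a tiny grayscale "image" flattened to a list of pixels


def mean_image(images: List[Image]) -> Image:
    """Pixel-wise average of the training images."""
    n = len(images)
    return [sum(img[i] for img in images) / n for i in range(len(images[0]))]


def learn_direction(images: List[Image], center: Image, steps: int = 100) -> Image:
    """Learn the single direction along which the training images vary most
    (the first principal component), via power iteration on the covariance matrix."""
    dim = len(center)
    centered = [[x - c for x, c in zip(img, center)] for img in images]
    cov = [[sum(row[i] * row[j] for row in centered) / len(images) for j in range(dim)]
           for i in range(dim)]
    v = [1.0] * dim  # initial guess; must not be orthogonal to the answer
    for _ in range(steps):
        w = [sum(cov[i][j] * v[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


def encode(img: Image, center: Image, direction: Image) -> float:
    """Encoder: compress an image into a one-number latent code."""
    return sum((x - c) * d for x, c, d in zip(img, center, direction))


def decode(code: float, center: Image, direction: Image) -> Image:
    """Decoder: turn a latent code back into an image."""
    return [c + code * d for c, d in zip(center, direction)]


# Hypothetical training set: four 4-pixel "images of a 2" drawn with different stroke intensity.
training = [[0.1, 0.2, 0.2, 0.1], [0.2, 0.4, 0.4, 0.2], [0.3, 0.6, 0.6, 0.3], [0.4, 0.8, 0.8, 0.4]]
center = mean_image(training)
direction = learn_direction(training, center)

# A damaged "2" (last pixel missing) is encoded to one number, then decoded
# into the kind of "2" the model has learned to imagine.
damaged = [0.35, 0.7, 0.7, 0.0]
code = encode(damaged, center, direction)
print(round(code, 3), [round(p, 3) for p in decode(code, center, direction)])
```

Note that the decoded image is symmetric again: the decoder can only produce images that look like the training “2”s, which is the sense in which it reconstructs a digit “based on how the computer imagines it.”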
